My love for FFmpeg knows no bounds: whenever I need to do some slick slicing & splicing, I always go with FFmpeg (or Avidemux).

The command lines below are written for bash, and you’ll obviously need FFmpeg.

Turning a video into a high quality GIF

Turning a video with more than 256 colors into a GIF while keeping all the color information is impossible. The GIF format just isn’t designed for that: each frame can only use a palette of up to 256 colors.

But what if there was a way to still convert the video into an acceptable GIF?

ffmpeg -i input.mp4 \
-vf "split[s0][s1];[s0]palettegen[p];[s1][p]paletteuse" \
-loop 0 output.gif

It is possible to generate a color palette with FFmpeg and use it (thanks to a SuperUser post).

Here is what the options do:

- `palettegen` & `paletteuse` generate the custom palette and then use it;
- `split` turns this into a one-liner, because the usual way would be to write the palette to a file before using it;
- `-loop` set to `0` makes the GIF repeat forever. A positive number sets how many times it loops, and `-1` disables looping so it plays only once.
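For comparison, here is a sketch of that "usual way", where the palette is written to a file first. It is wrapped in a hypothetical `gif_two_pass` function. The `-palette.png` naming and the `FFMPEG` variable (for pointing at a specific ffmpeg binary) are my own assumptions and are not part of the original command.

```shell
#!/usr/bin/env bash
# Hypothetical helper: two-pass GIF conversion with an explicit palette file.
# FFMPEG can point at a specific ffmpeg binary; it defaults to "ffmpeg".
gif_two_pass() {
  local input="$1" output="$2"
  local ff="${FFMPEG:-ffmpeg}"
  # Assumption: keep the palette next to the output (out.gif -> out-palette.png)
  local palette="${output%.*}-palette.png"

  # Pass 1: analyse the whole clip and save the custom palette as a PNG.
  # -y overwrites existing files without asking.
  "$ff" -y -i "$input" -vf "palettegen" "$palette" || return 1

  # Pass 2: map the video (input 0) onto the saved palette (input 1).
  "$ff" -y -i "$input" -i "$palette" \
    -filter_complex "[0:v][1:v]paletteuse" -loop 0 "$output"
}

# Usage: gif_two_pass input.mp4 output.gif
```

Both approaches produce the same kind of GIF. The two-pass version is handy when you want to inspect the palette or reuse it, and the `split` version saves you a temporary file.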

With a complex filtergraph it’s also possible to speed up the GIF:

ffmpeg -i input.mp4 \
-filter_complex "split[s0][s1];[s0]palettegen[p];[s1]setpts=0.5*PTS[s1_fast];[s1_fast][p]paletteuse" \
-loop 0 output.gif
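`setpts` rewrites each frame’s timestamp, so its multiplier is the inverse of the speed-up. `0.5*PTS` plays twice as fast, `0.25*PTS` four times as fast, and `2*PTS` plays at half speed. A tiny hypothetical helper (the name `setpts_for_speed` is mine) can turn a speed factor into the filter string:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a setpts filter for a given playback speed.
# Speeding up by a factor S means multiplying every timestamp by 1/S.
setpts_for_speed() {
  # awk does the floating-point division that plain shell arithmetic can't.
  awk -v speed="$1" 'BEGIN { printf "setpts=%g*PTS\n", 1 / speed }'
}

# Example: double speed, as in the command above
setpts_for_speed 2
```

You would then paste the result in place of `setpts=0.5*PTS` in the filtergraph.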

But what if my source is high resolution? Can I scale it down? Yes!

ffmpeg -i input.mp4 \
-vf "scale=320:-1:flags=lanczos,split[s0][s1];[s0]palettegen[p];[s1][p]paletteuse" \
-loop 0 output.gif

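Setting one of the scale filter’s dimensions to -1 keeps the aspect ratio, as with 320:-1 here (flags=lanczos selects the Lanczos scaling algorithm). But that’s not all! We can also reduce the frame rate by prepending fps=10 to the filter chain, as in fps=10,scale=320:-1:flags=lanczos,...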

I understand that 10 FPS is pretty low, but this is GIF territory, and GIF isn’t exactly the best format in 2024 and beyond.

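Keep in mind that GIFs at 60 FPS don’t play back well and take up a lot of space. GIF frame delays are stored in hundredths of a second, so 60 FPS can’t even be represented exactly. That’s why you should reduce the frame rate, either with the -r output option or with the fps filter in -vf.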
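Here is a sketch that puts the frame rate, scaling and palette steps together, so the filter string can be built from two numbers. The `gif_filter` function name is hypothetical. It simply reproduces the filters from the commands above, in the order fps, scale, palette.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the GIF filtergraph for a given frame rate and width.
# Order matters: drop frames first, then scale, then build/apply the palette,
# so palettegen only analyses the frames that actually end up in the GIF.
gif_filter() {
  local fps="$1" width="$2"
  printf 'fps=%s,scale=%s:-1:flags=lanczos,split[s0][s1];[s0]palettegen[p];[s1][p]paletteuse' \
    "$fps" "$width"
}

# Usage (requires ffmpeg):
#   ffmpeg -i input.mp4 -vf "$(gif_filter 10 320)" -loop 0 output.gif
```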

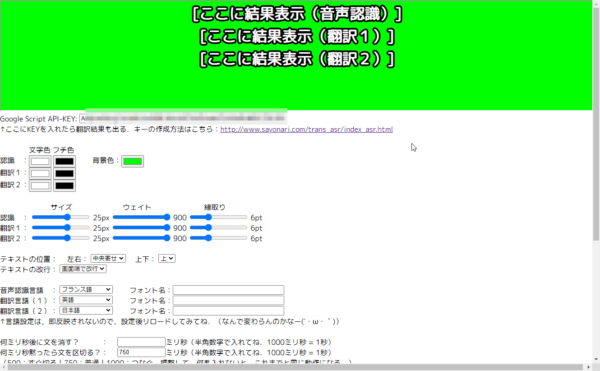

Scroll text on a video

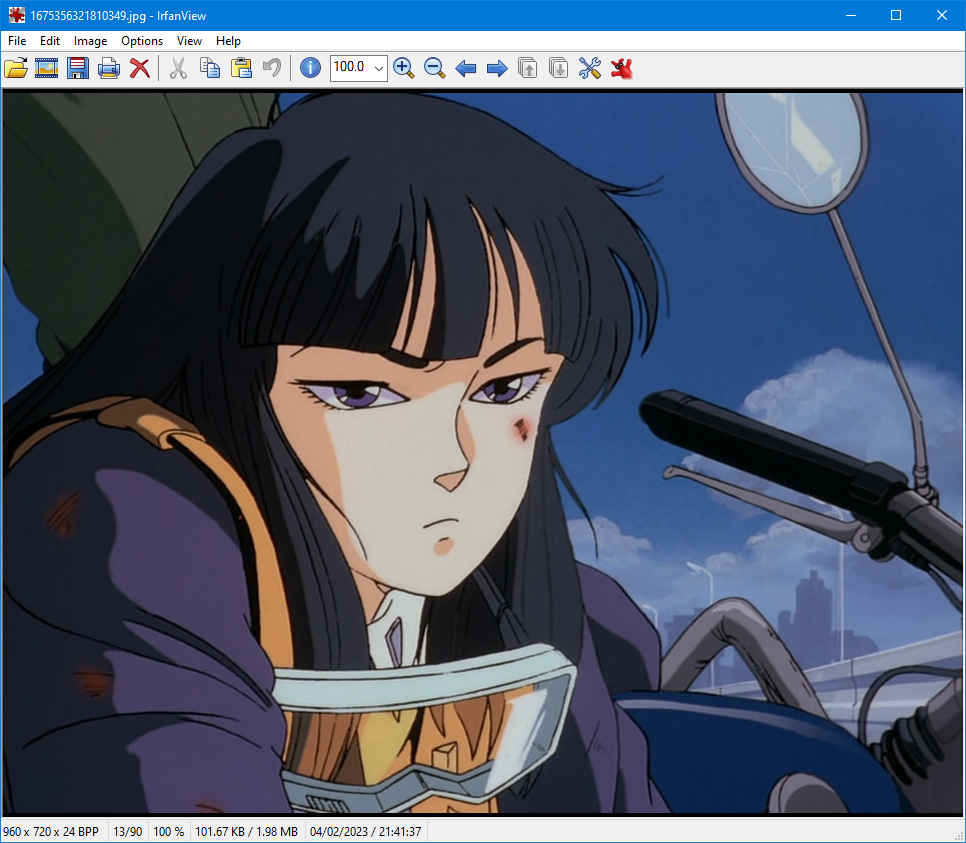

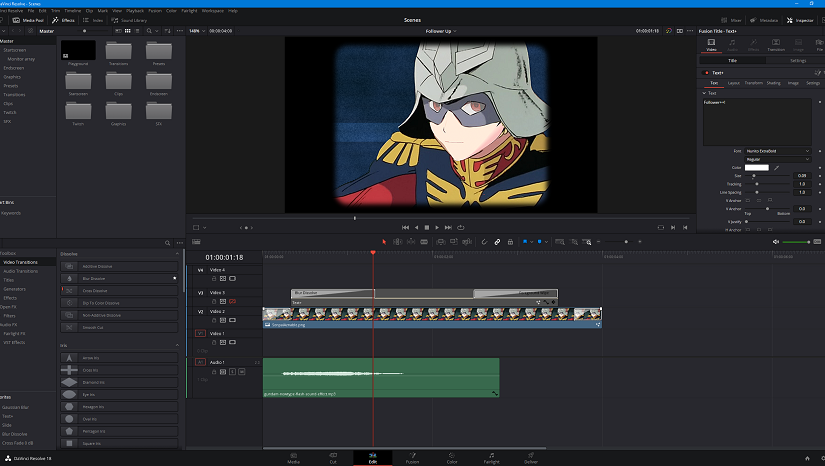

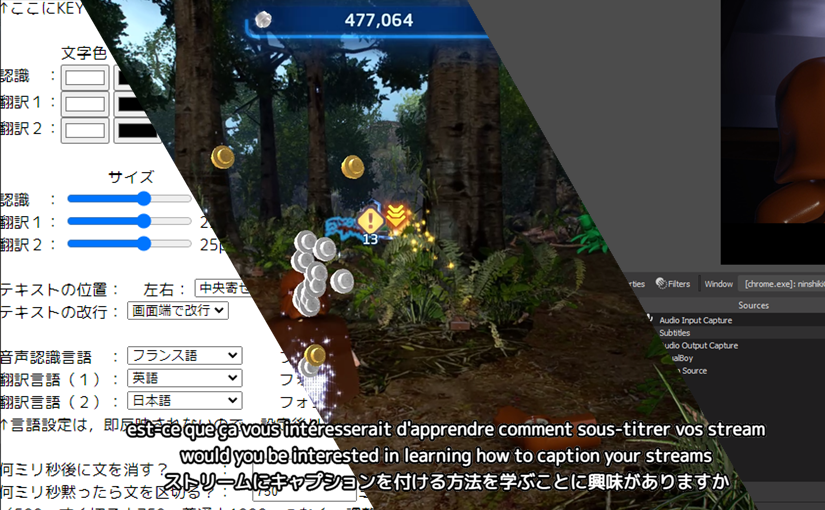

This is part of what I do to the music videos I download from YouTube for use while I’m streaming (of course, the audio never makes it into the VOD, for obvious DMCA reasons).

For the record, I decided to display part of the video on my overlay and have the title scroll (when it’s wider than the video). I won’t be sharing my script, but I will share the FFmpeg command line I use to make the text scroll.

# Do not forget to escape quotes, commas and colons too
TITLE="My super song\\: Ayyy LMAO - By the \\\"Senpai\\\""

ffmpeg -i input.mp4 \
-acodec copy -vcodec h264_nvenc \
-vf "drawtext=text=\'${TITLE}\':fontfile=./NotoSansJP-VariableFont_wght.ttf:y=(h-text_h)/2:x=w-mod(max(t-0\\,0)*(120)\\, 2*(tw+150)):fontcolor=ffcc00:fontsize=70:shadowx=2:shadowy=2:box=1:boxborderw=3:boxcolor=#000000AA" \
-b:v 8M -rc vbr -cq 19 -preset slow -profile:v main \
output.mp4
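Escaping by hand gets old quickly. Here is a minimal sketch of a hypothetical `escape_title` helper that applies the escaping the comment above asks for: a backslash in front of every colon, comma and double quote. It assumes the title contains no single quotes or backslashes, which would need more work, so treat it as a starting point.

```shell
#!/usr/bin/env bash
# Hypothetical helper: escape a raw title for use inside text=\'...\' in -vf.
# Adds a backslash before each colon, comma and double quote.
# Assumption: the title has no single quotes or backslashes.
escape_title() {
  printf '%s' "$1" | sed 's/[:,"]/\\&/g'
}

# Produces the same value as the hand-escaped TITLE variable above
TITLE=$(escape_title 'My super song: Ayyy LMAO - By the "Senpai"')
printf '%s\n' "$TITLE"
```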

To avoid a gap in the scrolling, you can add a second drawtext filter that is delayed so it follows right behind the first one:

# Do not forget to escape quotes, commas and colons too
TITLE="My super song\\: Ayyy LMAO - By the \\\"Senpai\\\""

ffmpeg -i input.mp4 \
-acodec copy -vcodec h264_nvenc \
-vf "drawtext=text=\'${TITLE}\':fontfile=./NotoSansJP-VariableFont_wght.ttf:y=(h-text_h)/2:x=w-mod(max(t\\,0)*(120)\\, 2*(tw+150)):fontcolor=ffcc00:fontsize=70:shadowx=2:shadowy=2:box=1:boxborderw=3:boxcolor=#000000AA,drawtext=text=\'${TITLE}\':fontfile=./NotoSansJP-VariableFont_wght.ttf:y=(h-text_h)/2:x=w-mod(max(t-(tw+150)/(120)-0\\,0)*(120)\\, 2*(tw+150)):fontcolor=ffcc00:fontsize=70:shadowx=2:shadowy=2:box=1:boxborderw=3:boxcolor=#000000AA" \
-b:v 8M -rc vbr -cq 19 -preset slow -profile:v main \
output.mp4

Of course, the video has to be re-encoded for this to work, and the appropriate font has to be available at the path given to fontfile. The original audio is copied as-is because it doesn’t need to be re-encoded.

Let’s break down the filter element by element, as it is really messy:

drawtext=
text=\'${TITLE}\'
fontfile=./NotoSansJP-VariableFont_wght.ttf
y=(h-text_h)/2
x=w-mod(max(t\\,0)*(120)\\, 2*(tw+150))

First we use the drawtext filter, which takes multiple parameters:

- `text` is the input text. Since we pass it from the command line, we need to escape the quotes, colons and commas;
- `fontfile` is a relative path to a font. In my case I went with NotoSansJP, as it also supports Japanese characters;
- `x` & `y` are the position of the text. Instead of a static position we pass a formula:
  - `w` stands for the width of the video (and `h` for its height);
  - `tw` or `text_w` is the width of the rendered text;
  - `text_h` or `th` is the height of the rendered text;
  - `t` represents the timestamp in seconds and can be used in math operations. Here we use it to make the text scroll at a steady speed;
  - `(h-text_h)/2` simply centers the text vertically;
- The other parameters are not really important.

The trick to getting scrolling text with no gap is to repeat the same filter a second time, but with a delayed x parameter:

x=w-mod(max(t-(tw+150)/(120)\\,0)*(120)\\, 2*(tw+150))

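The 150 in the second argument to mod also appears in the first argument, added to the text width. As a result, the second title starts (tw+150)/120 seconds later, which puts it exactly one text width plus 150 pixels behind the first. Nothing is actually delayed, though: until then, the second title simply waits off screen at x=w. The values are: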

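- 150: margin, the gap in pixels between the end of one title and the start of the next;
- 120: speed, in pixels per second;
- 0: start time offset, in seconds.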

For a simplified x parameter (pseudo code explanation):

MARGIN=150
SPEED=120

# First title
x=
  w-mod(
    max(t, 0) * (${SPEED}), 2 * (tw + ${MARGIN})
  )

# Second title
x=
  w-mod(
    max(t - (tw + ${MARGIN}) / (${SPEED}), 0) * (${SPEED}), 2 * (tw + ${MARGIN})
  )
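It can help to evaluate both formulas at a few timestamps to see how they work together. The sketch below uses a hypothetical `scroll_x` function that mirrors the x expression in awk; ffmpeg’s `mod` behaves like awk’s `%` for the positive values used here. The video width of 640 and text width of 1000 are made-up numbers for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: evaluate the drawtext x expression outside ffmpeg.
#   x = w - mod(max(t - delay, 0) * speed, 2 * (tw + margin))
# Arguments: t w tw margin speed copy (copy is 0 for the first title, 1 for the second).
scroll_x() {
  awk -v t="$1" -v w="$2" -v tw="$3" -v margin="$4" -v speed="$5" -v copy="$6" 'BEGIN {
    delay = copy * (tw + margin) / speed   # second title waits (tw + margin) / speed
    d = t - delay
    if (d < 0) d = 0                       # max(t - delay, 0)
    period = 2 * (tw + margin)             # distance travelled before wrapping
    printf "%.0f\n", w - (d * speed) % period
  }'
}

# Assumed values: 640 px wide video, 1000 px wide title, margin 150, speed 120
for t in 0 5 12 20; do
  printf 't=%ss first=%s second=%s\n' "$t" \
    "$(scroll_x "$t" 640 1000 150 120 0)" "$(scroll_x "$t" 640 1000 150 120 1)"
done
```

At t=12 the titles sit at -800 and 350, exactly tw+margin = 1150 pixels apart. They stay that far apart after the first one wraps around (540 and -610 at t=20).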

It’s confusing, but that’s how it works:

# Do not forget to escape quotes, commas and colons too
TITLE="My super song\\: Ayyy LMAO - By the \\\"Senpai\\\""
MARGIN=150
SPEED=120

ffmpeg -i input.mp4 \
-acodec copy -vcodec h264_nvenc \
-vf "drawtext=text=\'${TITLE}\':fontfile=./NotoSansJP-VariableFont_wght.ttf:y=(h-text_h)/2:x=w-mod(max(t-0\\,0)*(${SPEED})\\, 2*(tw+${MARGIN})):fontcolor=ffcc00:fontsize=70:shadowx=2:shadowy=2:box=1:boxborderw=3:boxcolor=#000000AA,drawtext=text=\'${TITLE}\':fontfile=./NotoSansJP-VariableFont_wght.ttf:y=(h-text_h)/2:x=w-mod(max(t-(tw+${MARGIN})/(${SPEED})-0\\,0)*(${SPEED})\\, 2*(tw+${MARGIN})):fontcolor=ffcc00:fontsize=70:shadowx=2:shadowy=2:box=1:boxborderw=3:boxcolor=#000000AA" \
-b:v 8M -rc vbr -cq 19 -preset slow -profile:v main \
output.mp4

In my testing, long titles sometimes glitched out a bit and lost their background box. Please do some testing and tweak the commands to fit your needs.

Two scrolling titles might not be needed anyway!

Resize the video

Part two of what I do to the YouTube music videos I use on my stream: I resize them so they don’t take up the whole screen but still keep the proper aspect ratio.

ffmpeg -i input.mp4 \
-acodec copy -vcodec h264_nvenc \
-vf "scale=640:-1,crop=in_w:120:0:in_h/2" \
-b:v 8M -rc vbr -cq 19 -preset slow -profile:v main \
output.mp4

In this case I’ve used my GPU for the encoding (h264_nvenc requires an NVIDIA card); feel free to encode however you want. The original audio is copied as-is because it doesn’t need to be re-encoded.
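To get a concrete idea of what `scale=640:-1,crop=in_w:120:0:in_h/2` keeps, here is the arithmetic for an assumed 1920x1080 source, done with plain shell integer arithmetic. `crop` takes width:height:x:y, so the result is a full-width strip 120 pixels high whose top edge sits at the vertical middle of the scaled frame. If you want the strip centered instead, `(in_h-120)/2` as the y value would do that.

```shell
#!/usr/bin/env bash
# Worked example of the scale + crop geometry (the source size is an assumption).
SRC_W=1920
SRC_H=1080

# scale=640:-1 -> width 640, height chosen to keep the aspect ratio
OUT_W=640
OUT_H=$(( SRC_H * OUT_W / SRC_W ))

# crop=in_w:120:0:in_h/2 -> width:height:x:y, applied to the scaled frame
CROP_W=$OUT_W
CROP_H=120
CROP_Y=$(( OUT_H / 2 ))                  # top edge of the kept strip
CENTERED_Y=$(( (OUT_H - CROP_H) / 2 ))   # what (in_h-120)/2 would give

echo "scaled frame: ${OUT_W}x${OUT_H}"
echo "kept strip: ${CROP_W}x${CROP_H}, rows ${CROP_Y}..$(( CROP_Y + CROP_H - 1 ))"
echo "a centered strip would start at y=${CENTERED_Y}"
```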

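You can combine the scrolling text filter with the resize, but the order matters. drawtext works on whatever frame it receives, so if it ran first, the text would be scaled and cropped along with the video:

- Resize the video;
- Use a comma (,) to separate filters;
- Add the scrolling text.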
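A filter chain is just filters joined by commas in the order they run, so a hypothetical `join_filters` helper can keep that order explicit. This sketch relies on a standard shell detail: `"$*"` joins the arguments using the first character of `IFS`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: join filters with commas, in the order given.
join_filters() {
  local IFS=,      # "$*" uses the first character of IFS as the separator
  printf '%s' "$*"
}

# Resize first, then draw the text (drawtext arguments shortened for the example)
VF=$(join_filters "scale=640:-1" "crop=in_w:120:0:in_h/2" "drawtext=text=hello")
printf '%s\n' "$VF"
```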

Keep things in that order and you’ll end up with this big fat command, which I’ve reworked a bit to make it easier to read:

# Do not forget to escape quotes, commas and colons too
TITLE="My super song\\: Ayyy LMAO - By the \\\"Senpai\\\""
MARGIN=150
SPEED=120

# Font and box parameters
FONTFILE="./NotoSansJP-VariableFont_wght.ttf"
FONTCOLOR="ffcc00"
BOXCOLOR="000000AA"
FONTPARAMS="fontcolor=${FONTCOLOR}:fontsize=70:shadowx=2:shadowy=2:box=1:boxborderw=3:boxcolor=#${BOXCOLOR}"

# Title scrolling
TITLE_ONE="y=(h-text_h)/2:x=w-mod(max(t-0\\,0)*(${SPEED})\\, 2*(tw+${MARGIN}))"
TITLE_TWO="y=(h-text_h)/2:x=w-mod(max(t-(tw+${MARGIN})/(${SPEED})-0\\,0)*(${SPEED})\\, 2*(tw+${MARGIN}))"

# Scaling and cropping (RESIZE uses lanczos as an example, RESIZE_PLAIN the default scaler)
RESIZE_PLAIN="scale=640:-1,crop=in_w:120:0:in_h/2"
RESIZE="scale=640:-1:flags=lanczos,crop=in_w:120:0:in_h/2"

ffmpeg -i input.mp4 \
-acodec copy -vcodec h264_nvenc \
-vf "${RESIZE},drawtext=text=\'${TITLE}\':fontfile=${FONTFILE}:${TITLE_ONE}:${FONTPARAMS},drawtext=text=\'${TITLE}\':fontfile=${FONTFILE}:${TITLE_TWO}:${FONTPARAMS}" \
-b:v 8M -rc vbr -cq 19 -preset slow -profile:v main \
output.mp4

And this was my secret behind the resized, cropped music videos with scrolling text.

Homework: Combine EVERYTHING into a GIF

It’s as simple as appending the palette generation and palette use filters to the full filter chain. I kid you not! But making that work is your homework: just don’t forget to remove the audio and video encoding options.

Beyond infinity

I’d recommend checking out ffmprovisr, which is a very good guide to doing all sorts of things with FFmpeg.

As I don’t support AI, I can’t really recommend using ChatGPT to write FFmpeg commands. It might help you with some stuff, but keep in mind that it can be confidently wrong.